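PyTorch Basics (Part 1)

PyTorch Environment Setup

Installing conda

conda is an open-source package and environment manager. It installs multiple versions of software packages together with their dependencies and makes it easy to switch between them. conda was created for Python programs and runs on Linux, macOS, and Windows; it can also package and distribute other software.

Note: on Windows, run the commands below in cmd. Some of them do not work in PowerShell.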

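Creating a Python Environment

Create the environment (this is only an example; choose the Python version and the environment name to suit your needs)

    conda create -n name python=3.8

Activate the environment

    conda activate name

Exit the environment

    conda deactivate

List the packages in the environment (every package visible to pip)

    pip list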

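Installing NVIDIA CUDA and cuDNN (skip this step if you have no GPU)

Check the local GPU

Run the following command in a cmd console to see the GPU model and the highest CUDA version that the installed driver supports:

    nvidia-smi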

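Installing CUDA

- Download the CUDA installer from https://developer.nvidia.com/cuda-downloads

- Choose Windows or Linux, then the installer version that matches your system

- Run the installer

- The default options are fine; if an error occurs, see https://blog.csdn.net/weixin_53762670/article/details/131845364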

Verifying the CUDA installation

- Open cmd and run the following command; output like the text below means the installation succeeded

    nvcc -V

    nvcc: NVIDIA (R) Cuda compiler driver
    Copyright (c) 2005-2021 NVIDIA Corporation
    Built on Wed_Jun__2_19:15:15_Pacific_Daylight_Time_2021
    Cuda compilation tools, release 11.5, V11.5.119

安装CUDNN

- 下载CUDNN安装包,下载地址:https://developer.nvidia.com/cudnn

- 选择对应CUDA版本的CUDNN安装包

- 将下载后的压缩包解压到CUDA安装的根目录下,例如:C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.5

- 验证CUDNN安装是否成功

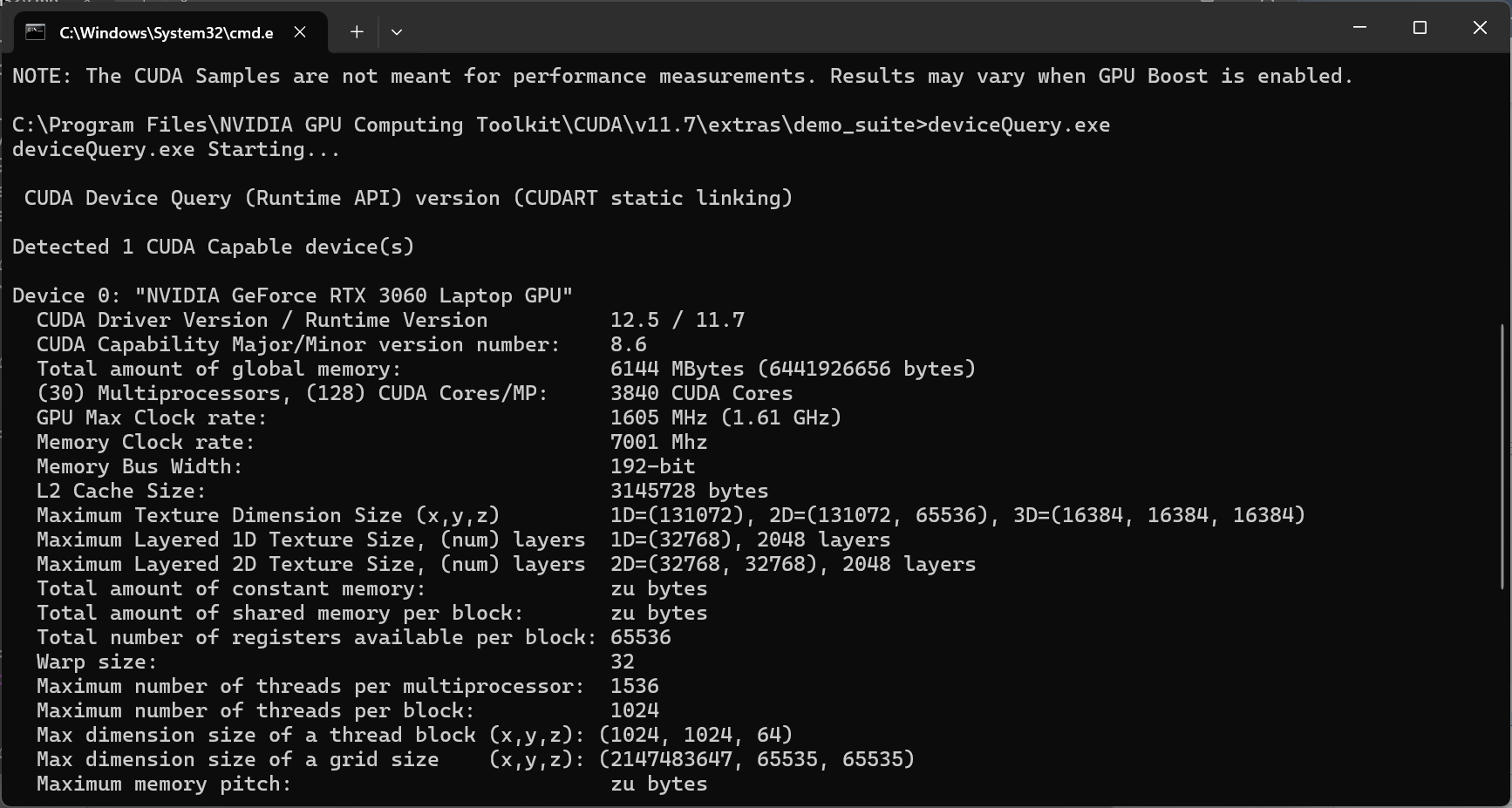

- 进入到C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.7\extras\demo_suite 目录下,在上方文件路径中输入CMD,

然后回车,进入到该目录命令窗口下,首先输入bandwidth.exe,如果出现以下信息,则说明安装成功 - 然后在输入deviceQuery.exe,如果出现以下信息,则说明安装成功

- 进入到C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.7\extras\demo_suite 目录下,在上方文件路径中输入CMD,

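Installing PyTorch

Install the PyTorch packages

Run the following command to install PyTorch. Replace the pytorch-cuda version with the CUDA version you actually have:

    conda install pytorch torchvision torchaudio pytorch-cuda=12.1 -c pytorch -c nvidia

Verifying the PyTorch installation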
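The check can also be scripted. The sketch below is a convenience, not part of the original walkthrough: the helper name `describe_environment` is made up for illustration, and it relies only on `torch.__version__`, `torch.cuda.is_available()`, and `torch.cuda.get_device_name()`. It falls back to a CPU-only report when PyTorch is missing or no GPU is visible.

```python
def describe_environment():
    """Report whether PyTorch imports and whether a CUDA GPU is visible.

    Hypothetical helper for illustration; it is not part of PyTorch.
    """
    info = {"torch_installed": False, "cuda_available": False, "device": "cpu"}
    try:
        import torch
    except (ImportError, OSError):
        # PyTorch is absent, or its native libraries failed to load
        return info
    info["torch_installed"] = True
    info["torch_version"] = torch.__version__
    if torch.cuda.is_available():
        info["cuda_available"] = True
        info["device"] = "cuda"
        info["gpu_name"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

Run it inside the activated environment. If `cuda_available` is False on a machine that has a GPU, the usual suspects are a CPU-only PyTorch build or a driver that is too old for the installed CUDA version.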

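- Open cmd, activate your environment, start Python, and enter the following commands

    conda activate name
    python
    >>> import torch
    >>> torch.cuda.is_available()

- If the last call prints True, as below, the installation succeeded and the GPU can be used for training

    >>> import torch
    >>> torch.cuda.is_available()
    True

Processing Data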

Loading Datasets

Introduction to Dataset:

    import torchvision
    from torchvision import transforms

    # Convert PIL images to tensors
    trans = transforms.Compose([
        transforms.ToTensor()
    ])

    # Download CIFAR-10 into ./dataset (training and test splits)
    train_set = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=trans, download=True)
    test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=trans, download=True)

    img, target = test_set[0]          # one sample: (image tensor, class index)
    print(img)
    print(target)
    print(test_set.classes[target])    # class name for that index

- Dataset is a base class that is meant to be subclassed rather than used directly: a subclass overrides `__getitem__` and `__len__`. The base class does not implement `__getitem__`, and DataLoader's default sampling needs `__len__`, so in practice both must be provided.

- `__getitem__` returns the sample at a given index.

- `__len__` returns the size of the dataset as an integer.

    import os

    from PIL import Image
    from torch.utils.data import Dataset

    help(Dataset)   # print the class documentation

    class mydata(Dataset):
        def __init__(self, root_dir, label_dir):
            self.root_dir = root_dir
            self.label_dir = label_dir               # the folder name doubles as the label
            self.path = os.path.join(self.root_dir, self.label_dir)
            self.img_path = os.listdir(self.path)    # file names of all images in that folder

        def __getitem__(self, idx):
            img_name = self.img_path[idx]
            img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
            img = Image.open(img_item_path)
            label = self.label_dir
            return img, label

        def __len__(self):
            return len(self.img_path)

    # Placeholder paths (not given in the original): one sub-folder of images per class
    root_dir = "dataset/train"
    ants_dataset = mydata(root_dir, "ants")
    img, label = ants_dataset[0]
    img.show()

    bees_dataset = mydata(root_dir, "bees")
    all_train = ants_dataset + bees_dataset   # concatenate the two datasets
    print(len(all_train))

Introduction to DataLoader:

- DataLoader is an iterable that loads a dataset in batches. Its parameters, such as batch_size, shuffle, and num_workers, control how the data is loaded.
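To make the roles of `__len__`, `__getitem__`, `batch_size`, and `drop_last` concrete, here is a plain-Python sketch that needs no PyTorch. `SquaresDataset`, `iter_batches`, and `num_batches` are illustrative names, not PyTorch APIs, and the loop only mimics sequential batching (shuffle=False); the real DataLoader also collates samples into tensors and can use worker processes.

```python
import math


class SquaresDataset:
    """Toy map-style dataset: sample i is the pair (i, i * i)."""

    def __init__(self, n):
        self.n = n

    def __len__(self):
        return self.n

    def __getitem__(self, idx):
        if not 0 <= idx < self.n:
            raise IndexError(idx)
        return idx, idx * idx


def iter_batches(dataset, batch_size, drop_last=False):
    """Yield lists of samples in index order, batch_size at a time."""
    batch = []
    for i in range(len(dataset)):
        batch.append(dataset[i])
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch and not drop_last:
        yield batch  # final, smaller batch


def num_batches(n_samples, batch_size, drop_last=False):
    """Number of batches produced for n_samples samples."""
    if drop_last:
        return n_samples // batch_size
    return math.ceil(n_samples / batch_size)


sizes = [len(b) for b in iter_batches(SquaresDataset(10), batch_size=4)]
print(sizes)                                      # [4, 4, 2]
print(num_batches(10000, 64))                     # 157
print(num_batches(10000, 64, drop_last=True))     # 156
```

The last two lines use the size of the CIFAR-10 test split (10,000 images): with batch_size=64 the final batch holds only 16 images unless drop_last=True discards it.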

    import torchvision
    from torchvision import transforms
    from torch.utils.data import DataLoader

    trans = transforms.Compose([
        transforms.ToTensor()
    ])

    train_set = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=trans, download=True)
    test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=trans, download=True)
    print(type(test_set))

    loader = DataLoader(dataset=test_set, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

    # A single sample from the dataset
    img, target = test_set[0]
    print(img.shape)   # torch.Size([3, 32, 32])
    print(target)      # 3

    # A single batch from the loader: samples are stacked along a new first dimension
    for data in loader:
        imgs, targets = data
        print(imgs.shape)      # torch.Size([64, 3, 32, 32])
        print(targets.shape)   # torch.Size([64])
        break

Building the Network

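Introduction to nn.Module:

- nn.Module is the base class for networks. It is not used directly: a model subclasses nn.Module and overrides the forward method, which defines the network structure.

- forward defines the computation of the network and returns a tensor.

Building a network: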
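Before the PyTorch version, the idea of "subclass, override forward, then call the instance" can be sketched without PyTorch. The classes below (`TinyModule`, `AddOne`, `Double`, `TinySequential`) are made up for illustration and are a deliberate simplification: the real `nn.Module.__call__` also runs hooks and tracks parameters.

```python
class TinyModule:
    """Stand-in for nn.Module: calling the instance dispatches to forward."""

    def __call__(self, x):
        return self.forward(x)

    def forward(self, x):
        raise NotImplementedError("subclasses must override forward")


class AddOne(TinyModule):
    def forward(self, x):
        return [v + 1 for v in x]


class Double(TinyModule):
    def forward(self, x):
        return [v * 2 for v in x]


class TinySequential(TinyModule):
    """Stand-in for nn.Sequential: feed the output of each layer to the next."""

    def __init__(self, *layers):
        self.layers = layers

    def forward(self, x):
        for layer in self.layers:
            x = layer(x)
        return x


print(AddOne()([10, 20, 30]))                      # [11, 21, 31]
print(TinySequential(AddOne(), Double())([1, 2]))  # [4, 6]
```

This is why user code writes `model(x)` rather than `model.forward(x)`: the call goes through the base class first, which is where PyTorch attaches its extra bookkeeping.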

    import torch
    from torch import nn

    class mymodel(nn.Module):
        def __init__(self):
            super(mymodel, self).__init__()

        def forward(self, x):
            y = x + 1
            return y

    a = mymodel()
    x = torch.tensor([10, 20, 30])
    print(a(x))   # calling the module runs forward: tensor([11, 21, 31])

A larger example, a small convolutional network for CIFAR-10:

    import torch
    import torchvision
    import torch.nn as nn
    from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
    from torch.utils.data import DataLoader
    from tensorboardX import SummaryWriter

    dataset = torchvision.datasets.CIFAR10("./dataset", train=False,
                                           transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=64)

    class mod(nn.Module):
        def __init__(self):
            super(mod, self).__init__()
            # Variant 1: every layer as its own attribute
            self.conv1 = Conv2d(3, 32, 5, stride=1, padding=2)
            self.max_pool2d = MaxPool2d(2)
            self.conv2 = Conv2d(32, 32, 5, padding=2)
            self.max_pool2d2 = MaxPool2d(2)
            self.conv3 = Conv2d(32, 64, 5, padding=2)
            self.max_pool2d3 = MaxPool2d(2)
            self.flatten = Flatten()
            # 1024 = 64 channels * 4 * 4: each 2x2 pooling halves the size, 32 -> 16 -> 8 -> 4
            self.Linear = Linear(1024, 64)
            self.Linear2 = Linear(64, 10)
            # Variant 2: the same layers wrapped in a Sequential
            self.model1 = Sequential(
                Conv2d(3, 32, 5, stride=1, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10),
            )

        def forward(self, x):
            # Layer-by-layer equivalent of self.model1:
            # x = self.conv1(x)
            # x = self.max_pool2d(x)
            # x = self.conv2(x)
            # x = self.max_pool2d2(x)
            # x = self.conv3(x)
            # x = self.max_pool2d3(x)
            # x = self.flatten(x)
            # x = self.Linear(x)
            # x = self.Linear2(x)
            x = self.model1(x)
            return x

    modshi = mod()
    print(modshi)

    x = torch.ones((64, 3, 32, 32))
    out = modshi(x)

    for data in dataloader:
        imgs, targets = data
        out = modshi(imgs)
        print(out.shape)
        break

    # Visualize the network in TensorBoard
    writer = SummaryWriter("logs")
    writer.add_graph(modshi, x)
    writer.close()

Output:

    mod(
      (conv1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (max_pool2d): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (conv2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (max_pool2d2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (conv3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
      (max_pool2d3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (flatten): Flatten(start_dim=1, end_dim=-1)
      (Linear): Linear(in_features=1024, out_features=64, bias=True)
      (Linear2): Linear(in_features=64, out_features=10, bias=True)
      (model1): Sequential(
        (0): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (4): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (6): Flatten(start_dim=1, end_dim=-1)
        (7): Linear(in_features=1024, out_features=64, bias=True)
        (8): Linear(in_features=64, out_features=10, bias=True)
      )
    )
    torch.Size([64, 10])

Loss functions:

    import torch
    from torch.nn import L1Loss, MSELoss, CrossEntropyLoss

    x = torch.tensor([1, 2, 3], dtype=torch.float32)
    y = torch.tensor([1, 2, 5], dtype=torch.float32)
    x = torch.reshape(x, (1, 1, 1, 3))
    y = torch.reshape(y, (1, 1, 1, 3))

    # L1 loss summed over the elements: |1-1| + |2-2| + |3-5| = 2
    # (the keyword is reduction; the old reduce argument is a deprecated boolean)
    loss = L1Loss(reduction='sum')
    result = loss(x, y)
    print(result)   # tensor(2.)

    # Mean squared error: (0 + 0 + 4) / 3
    loss = MSELoss()
    result = loss(x, y)
    print(result)   # tensor(1.3333)

    # Cross-entropy takes raw scores of shape (batch, classes) and class indices of shape (batch,)
    x = torch.tensor([0.1, 0.2, 0.3])
    y = torch.tensor([1])
    x = torch.reshape(x, (1, 3))
    loss = CrossEntropyLoss()
    result = loss(x, y)
    print(result)   # -0.2 + log(e^0.1 + e^0.2 + e^0.3) = tensor(1.1019)

Optimizer:

    import torch
    import torchvision
    import torch.nn as nn
    from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential, CrossEntropyLoss
    from torch.optim import SGD
    from torch.utils.data import DataLoader

    dataset = torchvision.datasets.CIFAR10("./dataset", train=False,
                                           transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=64)

    class mod(nn.Module):
        def __init__(self):
            super(mod, self).__init__()
            self.model1 = Sequential(
                Conv2d(3, 32, 5, stride=1, padding=2),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10),
            )

        def forward(self, x):
            x = self.model1(x)
            return x

    modshi = mod()
    loss = CrossEntropyLoss()
    optim = SGD(modshi.parameters(), lr=0.01)

    for epoch in range(20):
        running_loss = 0.0
        for data in dataloader:
            imgs, targets = data
            out = modshi(imgs)
            loss1 = loss(out, targets)
            optim.zero_grad()    # clear the gradients left over from the previous step
            loss1.backward()     # backpropagate to compute new gradients
            optim.step()         # update the parameters
            running_loss = running_loss + loss1.item()   # .item() keeps a plain float, not the graph
        print(running_loss)      # total loss over this epoch

GPU usage:
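For GPU use, a device-agnostic variant is worth sketching, because hard-coded `.cuda()` calls and `torch.device("cuda")` fail on a machine without a GPU. The helper name `run_on_best_device` and the tiny `nn.Linear(4, 2)` model are illustrative assumptions; the calls themselves (`torch.cuda.is_available()`, `torch.device`, `.to(device)`) are standard PyTorch. The import is guarded so that the snippet still runs, and simply reports None, where PyTorch is not installed.

```python
try:
    import torch
except ImportError:
    torch = None  # PyTorch missing: the sketch then only reports None


def run_on_best_device():
    """Run one forward pass on the GPU if one is visible, else on the CPU."""
    if torch is None:
        return None
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model = torch.nn.Linear(4, 2).to(device)   # move the parameters
    batch = torch.ones(3, 4, device=device)    # create the data on the same device
    out = model(batch)
    return device.type, tuple(out.shape)


result = run_on_best_device()
print(result)
```

The model and every tensor passed to it must live on the same device; mixing CPU tensors with a GPU model raises a runtime error.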

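    # Option 1: call .cuda() on the model, the loss function, and every batch
    model = model.cuda()
    loss_fn = loss.cuda()
    imgs = imgs.cuda()
    targets = targets.cuda()

    # Option 2: pick a device once and move everything to it with .to(device)
    device = torch.device("cuda")
    model = model.to(device)
    imgs = imgs.to(device)

Using existing models:

    import torchvision
    from torch import nn
    from torch.utils.data import DataLoader

    # pretrained=True loads ImageNet weights; pretrained=False gives random initialization
    # (newer torchvision releases prefer the weights= argument and warn about pretrained=)
    vgg16_true = torchvision.models.vgg16(pretrained=True)
    vgg16_false = torchvision.models.vgg16(pretrained=False)

    dataset = torchvision.datasets.CIFAR10("./dataset", train=False,
                                           transform=torchvision.transforms.ToTensor(),
                                           download=True)
    dataloader = DataLoader(dataset, batch_size=64)

    # Append a linear layer after the last layer, mapping 1000 -> 10.
    # It is added to the classifier so that forward actually runs it.
    vgg16_true.classifier.add_module('add', nn.Linear(1000, 10))

    # Or replace the last layer of VGG directly
    vgg16_false.classifier[6] = nn.Linear(4096, 10)

Saving and loading the network: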

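    import torch
    import torchvision

    vgg16 = torchvision.models.vgg16(pretrained=True)

    # Save, method 1: model structure plus parameters
    # (loading it later requires the code that defines the model)
    torch.save(vgg16, 'vgg16.pth')

    # Load, method 1 (on PyTorch 2.6 and later this call needs weights_only=False)
    model = torch.load('vgg16.pth')
    print(model)

    # Save, method 2: parameters only, as a state dict
    torch.save(vgg16.state_dict(), "vgg.pth")

    # Load, method 2: build the model first, then load the parameters into it
    model = torchvision.models.vgg16()
    model.load_state_dict(torch.load('vgg.pth'))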
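The state-dict route can be checked end to end without downloading VGG. The sketch below is an illustration under stated assumptions: `roundtrip_state_dict` is a made-up helper, a small `nn.Linear(3, 2)` stands in for the real model, and an in-memory buffer replaces the .pth file. `map_location="cpu"` lets a checkpoint saved on a GPU machine load on a CPU-only one. The import is guarded so the snippet also runs where PyTorch is absent.

```python
import io

try:
    import torch
    from torch import nn
except ImportError:
    torch = None  # PyTorch missing: the sketch then only reports None


def roundtrip_state_dict():
    """Save a model's parameters, load them into a fresh model, and compare."""
    if torch is None:
        return None
    model = nn.Linear(3, 2)
    buffer = io.BytesIO()                      # stands in for a .pth file
    torch.save(model.state_dict(), buffer)
    buffer.seek(0)

    restored = nn.Linear(3, 2)                 # same architecture, new random weights
    restored.load_state_dict(torch.load(buffer, map_location="cpu"))

    original = model.state_dict()
    loaded = restored.state_dict()
    return all(torch.equal(original[k], loaded[k]) for k in original)


same = roundtrip_state_dict()
print(same)
```

Because only tensors are stored, this format does not depend on the model class being importable at load time, which is the main reason it is usually preferred over saving the whole model object.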

Complete Training and Validation Workflow

model.py

main.py

test.py
